Máté Aller

Postdoctoral Research Associate

MRC Cognition and Brain Sciences Unit

University of Cambridge

Biography

I am a cognitive computational neuroscientist with a background in medicine. My research focuses on human speech perception, using non-invasive neurophysiological recordings (MEG, EEG) together with state-of-the-art signal processing and machine learning approaches. The overarching aim of my work is to build better assistive speech technologies and AI speech recognition systems.

Interests

- Speech Perception

- Audio-Visual Perception

- MEG/EEG

- Machine Learning

- Automatic Speech Recognition

Education

PhD in Cognitive Neuroscience, 2019

University of Birmingham

General Medicine (MD), 2009

University of Szeged

Projects

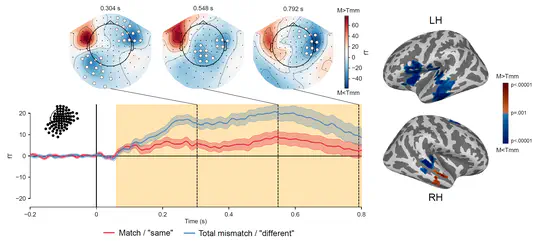

M/EEG project to understand the temporal dynamics of sharpening and prediction error computations in the brain during speech perception.

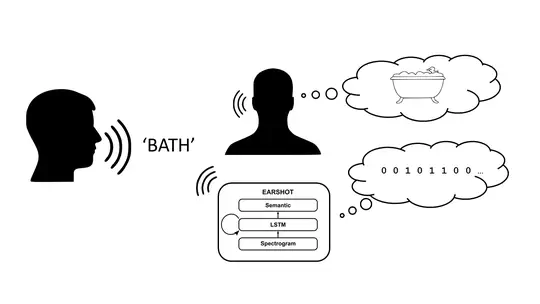

Combining neural network modelling, psycholinguistic tests and machine learning to compare the behavior and internal computations of automatic speech recognition (ASR) systems with human speech perception.

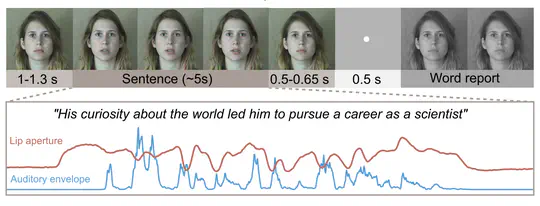

Speech perception in noisy environments is enhanced by seeing facial movements of communication partners. However, the neural mechanisms by which audio and visual speech are combined are not fully understood. This project aims to elucidate these neural mechanisms.

Publications

(2022).

Audiovisual adaptation is expressed in spatial and decisional codes.

Nature Communications.

(2022).

Differential auditory and visual phase-locking are observed during audio-visual benefit and silent lip-reading for speech perception.

Journal of Neuroscience.

(2019).

To integrate or not to integrate: Temporal dynamics of hierarchical Bayesian causal inference.

PLOS Biology.

(2018).

Invisible Flashes Alter Perceived Sound Location.

Scientific Reports.

(2016).

ATP-Evoked Intracellular Ca2+ Signaling of Different Supporting Cells in the Hearing Mouse Hemicochlea.

Neurochemical Research.