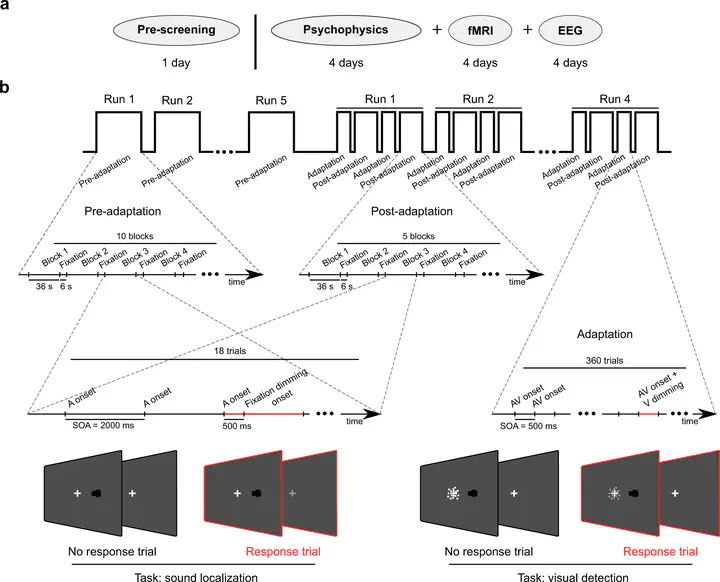

The brain continuously adapts to changing sensory statistics in its environment. Recent work has begun to reveal the neural circuits and representational codes that support this plasticity across the senses. In this project, we combined psychophysics with model-based representational fMRI and EEG to investigate how the adult human brain recalibrates when auditory and visual spatial signals are misaligned.

I applied support vector machines to decode spatial representations from neural data (fMRI, EEG) during audiovisual adaptation and compared these representations using pattern component modelling. The findings were published in Nature Communications, and the source code is freely available in the accompanying GitHub repository.
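To illustrate the decoding step only, the sketch below trains a linear support vector machine on simulated activity patterns and tests it on held-out trials. It is not the published analysis code. The data are synthetic, the task is simplified to a binary left-versus-right classification, and the function names (`simulate_trials`, `train_linear_svm`, `accuracy`) are hypothetical. The Pegasos-style subgradient solver is a stand-in chosen to keep the example dependency-free.

```python
import random


def simulate_trials(template, n_trials, rng, noise=1.0):
    """Simulate activity patterns for left (-1) and right (+1) stimulus locations.

    Hypothetical toy data: each pattern is +/- a fixed template plus Gaussian noise.
    """
    X, y = [], []
    for i in range(n_trials):
        label = 1 if i % 2 == 0 else -1  # alternate classes to keep them balanced
        X.append([label * t + rng.gauss(0.0, noise) for t in template])
        y.append(label)
    return X, y


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def train_linear_svm(X, y, lam=0.01, epochs=50, seed=0):
    """Fit a linear SVM (hinge loss + L2 penalty) by Pegasos-style subgradient descent.

    No intercept is fitted because the simulated patterns are symmetric around zero.
    """
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    order = list(range(len(X)))
    step = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            step += 1
            eta = 1.0 / (lam * step)  # decaying learning rate
            violates_margin = y[i] * dot(w, X[i]) < 1.0
            w = [(1.0 - eta * lam) * wj for wj in w]  # shrinkage from the L2 penalty
            if violates_margin:
                # hinge-loss subgradient step for trials inside the margin
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
    return w


def accuracy(w, X, y):
    """Fraction of trials whose predicted sign matches the true label."""
    correct = sum(1 for xi, yi in zip(X, y) if (1 if dot(w, xi) >= 0 else -1) == yi)
    return correct / len(X)


# Usage: train on one set of simulated trials, evaluate on an independent set.
rng = random.Random(0)
template = [rng.gauss(0.0, 1.0) for _ in range(20)]  # 20 simulated features (e.g. voxels)
X_train, y_train = simulate_trials(template, 40, rng)
X_test, y_test = simulate_trials(template, 40, rng)
w = train_linear_svm(X_train, y_train)
print(f"held-out decoding accuracy: {accuracy(w, X_test, y_test):.2f}")
```

Evaluating on trials that were not used for training is what makes the accuracy a measure of decodable information rather than of overfitting. With real fMRI or EEG data, this split would typically be made across runs or sessions.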

This work was conducted in collaboration with Agoston Mihalik and Uta Noppeney.