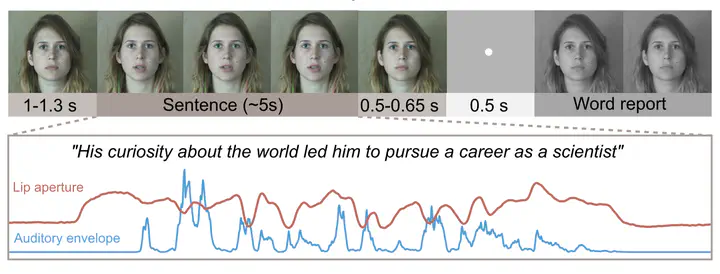

I used time-frequency and partial coherence analyses on MEG recordings of human listeners to demonstrate that visual speech signals improve the understanding of degraded speech by boosting neural synchronization to auditory speech. I also co-supervise a doctoral researcher who is currently investigating individual differences in audio-visual benefit in speech perception, and their underlying neural mechanisms, in a normal-hearing population. In the long run, we plan to extend these insights to hearing-impaired individuals and explore ways to add support for visual speech in a hearing prosthesis. See below for associated publications, code, and data.