Differential auditory and visual phase-locking are observed during audio-visual benefit and silent lip-reading for speech perception

Abstract

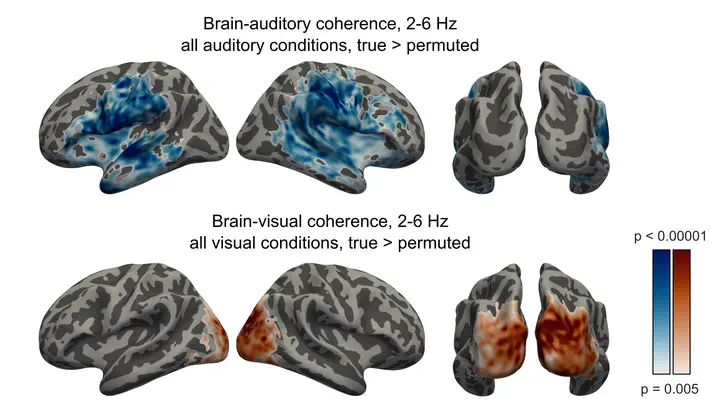

Speech perception in noisy environments is enhanced by seeing the facial movements of communication partners. However, the neural mechanisms by which auditory and visual speech are combined are not fully understood. We explored phase-locking to auditory and visual signals in MEG recordings from 14 human participants (6 females, 8 males) who reported words from single spoken sentences. We manipulated acoustic clarity and visual speech signals such that critical speech information was present in the auditory modality, the visual modality, or both. MEG coherence analysis revealed that both auditory and visual speech envelopes (auditory amplitude modulations and lip aperture changes) were phase-locked to 2-6 Hz brain responses in auditory and visual cortex, consistent with entrainment to syllable-rate components. Partial coherence analysis was used to separate neural responses to correlated audio-visual signals and showed non-zero phase-locking to the auditory envelope in occipital cortex during audio-visual (AV) speech. Furthermore, phase-locking to auditory signals in visual cortex was enhanced for AV speech compared to audio-only (AO) speech that was matched for intelligibility. Conversely, auditory regions of the superior temporal gyrus (STG) did not show above-chance partial coherence with visual speech signals during AV conditions, but did show partial coherence in visual-only (VO) conditions. Hence, visual speech enabled stronger phase-locking to auditory signals in visual areas, whereas phase-locking to visual speech in auditory regions occurred only during silent lip-reading. These differences in cross-modal interactions between auditory and visual speech signals are interpreted in line with cross-modal predictive mechanisms during speech perception.
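To illustrate why partial coherence is needed when the auditory envelope and lip aperture are correlated, the following is a minimal sketch on synthetic data, not the analysis pipeline used in the study. It assumes Welch cross-spectral estimates from SciPy; the function `partial_coherence`, the signal names (`aud`, `vis`, `brain`), the sampling rate, and the noise levels are all hypothetical choices made for the example. The simulated brain signal follows only the auditory envelope, yet it is coherent with the visual signal until the auditory contribution is partialled out.

```python
import numpy as np
from scipy.signal import coherence, csd


def partial_coherence(x, y, z, fs, nperseg):
    """Magnitude-squared coherence of x and y after removing the linear
    contribution of z at each frequency (Welch spectral estimates)."""
    f, Sxx = csd(x, x, fs=fs, nperseg=nperseg)
    _, Syy = csd(y, y, fs=fs, nperseg=nperseg)
    _, Szz = csd(z, z, fs=fs, nperseg=nperseg)
    _, Sxy = csd(x, y, fs=fs, nperseg=nperseg)
    _, Sxz = csd(x, z, fs=fs, nperseg=nperseg)
    _, Szy = csd(z, y, fs=fs, nperseg=nperseg)
    # Partial spectra: subtract the part of each spectrum explained by z.
    Sxy_z = Sxy - Sxz * Szy / Szz.real
    Sxx_z = Sxx.real - np.abs(Sxz) ** 2 / Szz.real
    Syy_z = Syy.real - np.abs(Szy) ** 2 / Szz.real
    return f, np.abs(Sxy_z) ** 2 / (Sxx_z * Syy_z)


# Synthetic example (assumed values, for illustration only).
rng = np.random.default_rng(0)
fs = 250                      # hypothetical sampling rate in Hz
n = 120 * fs                  # two minutes of data
aud = rng.standard_normal(n)                 # stand-in for the auditory envelope
vis = aud + 0.5 * rng.standard_normal(n)     # lip signal correlated with audio
brain = aud + rng.standard_normal(n)         # response driven by audio only

# Ordinary coherence between the brain signal and the visual signal.
f, coh_bv = coherence(brain, vis, fs=fs, nperseg=fs)
# Partial coherence between the same pair, with the audio removed.
_, pcoh_bv = partial_coherence(brain, vis, aud, fs=fs, nperseg=fs)

band = (f >= 2) & (f <= 6)    # the 2-6 Hz range discussed in the abstract
mean_coh = coh_bv[band].mean()
mean_pcoh = pcoh_bv[band].mean()
print(f"coherence 2-6 Hz: {mean_coh:.3f}, partial coherence: {mean_pcoh:.3f}")
```

In this simulation the ordinary coherence is inflated by the shared auditory component, whereas the partial coherence falls close to zero, which is the kind of separation the abstract refers to when distinguishing responses to correlated audio-visual signals.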